A comprehensive review of county-funded mental health programs helped Santa Clara County, California, better understand the services offered across its continuum of care. Building a foundation of evidence helps the county monitor program fidelity – that is, the degree to which a program adheres to its research-based design.

In 2019, Santa Clara County’s Behavioral Health Services Department (BHSD) partnered with The Pew Charitable Trusts’ Results First initiative to assess the evidence base of interventions offered in outpatient settings for adults with mental health issues. Although BHSD was already making data-driven decisions, it hoped to take this work a step further to ensure that the right services were being delivered in the right amount to the right population.

So the department compiled a program inventory, a catalog of programs it funds in county clinics and through contracted community providers. From the start, BHSD intentionally made this process collaborative.

“Relationships with providers [in] Santa Clara County are … critical, [given that] many of our programs and services are contracted out. … As such, buy-in and full engagement with all stakeholders around the potential value of this project … was important to achieve the desired outcome,” said Todd Landreneau, director of adult and older adult services at BHSD.

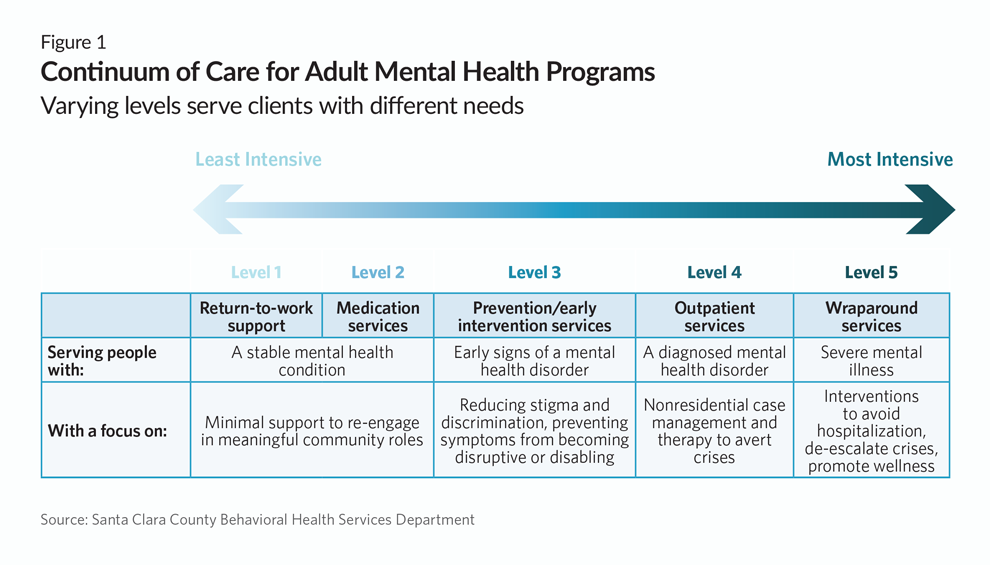

One of the first steps was to map the programs being offered across the department’s continuum of care, which contains several levels of services ranging from less intensive (such as case management for adults with mental health barriers to employment) to most intensive (inpatient treatment for psychiatric disorders). BHSD asked community providers to identify discrete interventions they offered their clients.

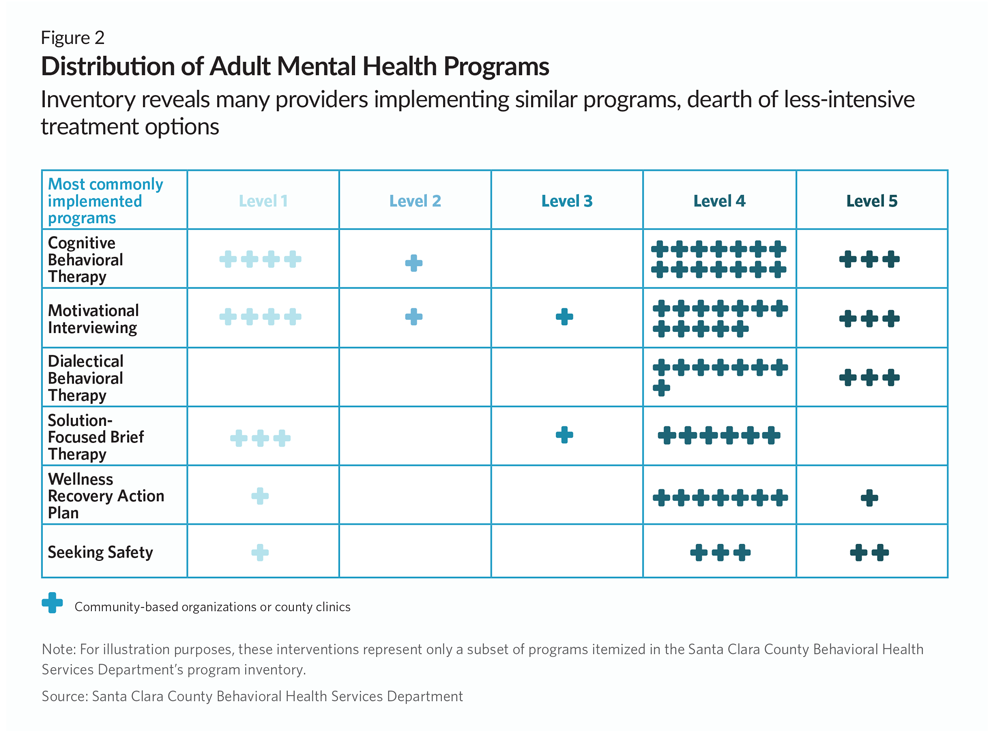

Department staff then convened a small external work group, made up of a subset of these providers, to validate the list for accuracy. Once this was completed, BHSD and the work group used the Results First Clearinghouse Database to compare the programs on the list with the evidence about what works to reduce mental health symptoms. They then created profiles for the most commonly offered programs. Each profile included fidelity markers, such as the target population and the number and frequency of treatment sessions, so that implementation could be compared across providers. For example, according to research, adults with a clinical diagnosis of depression who are receiving cognitive behavioral therapy should meet with their clinician one to two times a week for 12-20 weeks.
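For readers who maintain an inventory in a spreadsheet or database, a comparison against fidelity markers could be sketched roughly as follows. This is only an illustration, not BHSD's tooling: the class names, field names, and provider records are hypothetical, and the only figures taken from the example above are the cognitive behavioral therapy markers (one to two sessions a week for 12-20 weeks, for adults with a clinical diagnosis of depression).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FidelityProfile:
    """Fidelity markers for one program (hypothetical structure)."""
    program: str
    target_population: str
    min_sessions_per_week: int
    max_sessions_per_week: int
    min_weeks: int
    max_weeks: int


@dataclass(frozen=True)
class ProviderDelivery:
    """How one provider reports delivering a program (hypothetical fields)."""
    provider: str
    program: str
    population_served: str
    sessions_per_week: int
    weeks: int


def fidelity_gaps(profile: FidelityProfile, delivery: ProviderDelivery) -> list[str]:
    """Return the markers on which a provider's delivery departs from the profile."""
    gaps = []
    # Simplifying assumption: populations are compared as exact labels.
    if delivery.population_served != profile.target_population:
        gaps.append("population")
    if not (profile.min_sessions_per_week
            <= delivery.sessions_per_week
            <= profile.max_sessions_per_week):
        gaps.append("frequency")
    if not profile.min_weeks <= delivery.weeks <= profile.max_weeks:
        gaps.append("duration")
    return gaps


# Markers from the cognitive behavioral therapy example in the text.
DEPRESSION = "adults with a clinical diagnosis of depression"
CBT = FidelityProfile("cognitive behavioral therapy", DEPRESSION, 1, 2, 12, 20)

# Made-up provider records, for illustration only.
provider_a = ProviderDelivery("Provider A", CBT.program, DEPRESSION, 1, 16)
provider_b = ProviderDelivery("Provider B", CBT.program, DEPRESSION, 1, 6)

for record in (provider_a, provider_b):
    print(record.provider, fidelity_gaps(CBT, record))
```

In this sketch, a provider whose delivery falls within every marker yields an empty list, while one offering too few weeks of treatment is flagged on duration. A real monitoring system would likely need richer population matching and a tolerance for clinically justified variation.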

Through this process, BHSD identified several opportunities to improve program fidelity and standardize implementation.

- For the first time, BHSD could visualize the concentration of services across the levels of its continuum of care. This helped the department identify gaps in “step-down” treatment options to move lower-risk clients from a more intensive level of care to a less intensive one—a revelation that BHSD is considering in its future procurement plans.

- Through the inventory process, BHSD noticed mismatches between a program’s intended treatment population and the clients receiving the services in Santa Clara. To ensure that programs are being delivered to the clients they were designed to treat, BHSD decided that it wants to find or develop a clinical assessment tool that could be used across providers to better place clients in appropriate services.

- In identifying which programs were offered by multiple providers, BHSD realized that implementation of certain programs was not standardized. For example, the number of sessions offered in a treatment protocol differed from provider to provider. This has led BHSD to consider developing a common terminology for programs, selecting specific program models, revising contracts to encourage use of those models, and offering training to providers to streamline implementation.

- BHSD plans to continually monitor the alignment of currently funded programs with their evidence-based designs—establishing a fidelity monitoring system tied to the department’s inventory to ensure that clients are getting treatment that meets their needs. BHSD intends to start building this system around two programs—motivational interviewing and solution-focused brief therapy—reconsidering the data collected through regular reports, training providers at county clinics on their core components, and applying lessons learned to roll out the same process for other programs and community providers.

“The biggest change to this work is understanding what the concept of ‘evidence-based practice’ means, using data to plan the implementation or termination of a program, and aligning … services [for] effectiveness to both the client and the department,” said Margaret Obilor, division director of adult and older adult services at BHSD.

As Santa Clara’s example shows, program inventories offer jurisdictions a way to map services across their continuum of care, compare implementation across providers, and build a foundation for monitoring fidelity. Santa Clara also demonstrates that involving providers in the process can help jurisdictions collect more accurate information, spread knowledge about program assessment, and ensure buy-in when making decisions based on inventory findings.

Written by Sara Dube and Abby Hannifan. Sara Dube is a director and Abby Hannifan is a principal associate with the Results First Initiative.

Key Information

Source: Results First™

Publication Date: June 5, 2020

Read Time: 3 min

Component: Implementation Oversight

Resource Type: Written Briefs
