This brief is one in a series about the five key components of evidence-based policymaking as identified in “Evidence-Based Policymaking: A Guide for Effective Government,” a 2014 report by the Pew-MacArthur Results First Initiative. The other components are program assessment, budget development, implementation oversight, and targeted evaluations.

Overview

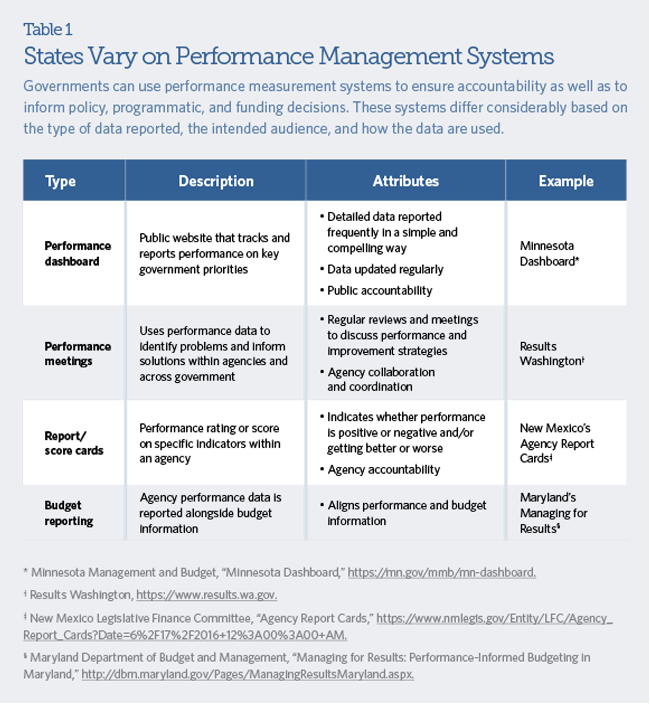

As states continue to face budgetary constraints, policymakers are looking for ways to make government more efficient and effective. Over the past three decades, many governments have developed systems to measure the performance of programs that aim to improve key outcomes in areas such as job creation, child safety, and health. These performance management systems—also called outcome monitoring systems—can help policymakers ensure publicly funded programs are achieving the results that constituents expect.

Effective performance management systems regularly track and report statewide or agency-level progress on key indicators to help determine whether government programs are working as intended.1 These systems provide useful performance information to staff and managers to help direct resources or attention to areas needing improvement. They can also help policymakers make informed policy and budget decisions, mitigate risk by identifying underperforming programs, and strengthen accountability by providing constituents with clear information on the effectiveness of services and by tracking progress on important measures of community health and well-being.

Although nearly every state has some type of outcome monitoring system in place, many face challenges in using them to inform decision-making. State agencies frequently spend significant resources to collect and report performance data that may not always be useful to decision-makers. At the same time, policymakers may lack information they need to make important policy and funding decisions. States also face challenges in coordinating these systems with other performance-related capacities. For example, many states have staff dedicated to research and evaluation, policy analysis, and other initiatives aimed at streamlining government processes that could be used together to make better decisions but are often fragmented.

This brief highlights key ways states are using performance data to improve programs and services, inform budget and policy decisions, and ensure accountability. It then identifies four actions to address challenges and improve performance management systems:

- Identify appropriate objectives, measures, and benchmarks.

- Analyze and report targeted performance information.

- Create opportunities to make better use of performance data.

- Coordinate and combine outcome monitoring with other evidence-based policymaking efforts.

How states use performance data to inform decision-making

Systems dedicated to monitoring the performance of state-funded programs and resources are crucial to applying an evidence-based policymaking framework to government activities. Some states focus their outcome monitoring efforts on continually improving the delivery of programs and services, while others use the information to strategically allocate resources or to reinforce public accountability for spending taxpayer dollars. Each system has unique characteristics and is tailored to state goals and objectives. The examples below highlight some of the innovative practices the Pew-MacArthur Results First Initiative identified where states have been able to use performance data to make more informed decisions and improve services to residents. Note that in many cases the states use their performance management systems for multiple purposes and not solely the purpose highlighted below.

Identify problems early

Outcome monitoring systems are commonly used to track the performance of key programs over time, measuring short- or long-term outcomes, and can be particularly useful in detecting areas where performance is below acceptable standards, as well as in showing whether performance is improving or getting worse. To advance their systems, states can establish benchmarks and targets for expected performance, use actual and historical data to track progress, and include narrative information to understand the context for a program’s results (for example, a major weather event or the implementation of a new initiative).

In New Mexico, agencies report on their performance to the Legislative Finance Committee (LFC) and the governor’s budget office each quarter. LFC staff analyze that information and issue reports to the committee members and public on how state government is operating. Performance reports can highlight areas needing attention or areas doing well. Where data indicate programs that need attention, staff may conduct follow-up analyses to identify causal factors or workload challenges, or conduct a program evaluation to pinpoint what is wrong and how to improve it. The committee also has agencies’ representatives testify in public hearings on their performance reports and action plans for improvement. “We use outcome monitoring as a surveillance tool,” said Charles Sallee, deputy director for program evaluation at the LFC.2 “The committee looks at performance data to highlight programs needing additional attention; we benchmark those programs and look at data over time. This tells us if performance isn’t moving in the right direction, and then we have to figure out why and what to do about it with the agency.”

Inform strategies for improvement

Information about underperforming programs or negative trends can lead to deeper discussions about the programs and strategies currently deployed in a state and what could be improved. To help drive regular use of performance data, some states have forums in place to discuss this information with leadership and develop potential solutions.

In Colorado, the state Department of Human Services (CDHS) tracks more than 75 performance measures across the department’s five divisions through a performance management system called C-Stat. Each month agency executives hold meetings with leaders from each programmatic office, using performance data from C-Stat to determine and discuss what is working and what needs improvement, and to develop appropriate strategies that lead to outcome improvement. “Once you collect data, everyone starts paying attention to it more,” said Melissa Wavelet, who directs the Office of Performance Management and Strategic Outcomes, which oversees C-Stat.3 Using information collected through C-Stat, CDHS has achieved many outcome and process improvements, including implementing strategies that reduced the use of isolation for chronically mentally ill patients in one of the state’s psychiatric hospitals by 95 percent over a five-year period and reduced the use of anti-psychotic medications by individuals at a State Veterans Nursing Home from 14 percent of the residents to just 8 percent.

Target resources to areas of greatest need

States can use performance data to inform funding and resource allocations. When broken down geographically, the data can help users identify counties, neighborhoods, or populations where additional resources or attention are needed to help improve outcomes or address disparities.

Get Healthy Idaho: Measuring and Improving Health is the state’s combined state health assessment and improvement plan. The plan, developed by the Idaho Department of Health and Welfare, Division of Public Health, targets key program areas related to increasing access to care and decreasing rates of diabetes, tobacco usage, and obesity. The state contracts with local public health agencies and health care systems to provide services to residents and uses data collected through this work to inform future funding and activity decisions. The division’s Population Health Workgroup uses population data on Idaho’s Leading Health Indicators, as well as progress in each of the targeted program areas. “At the state level, when we get a grant from CDC or another federal agency, we then have to decide how to distribute across districts,” said Elke Shaw-Tulloch, administrator of the Division of Public Health.4 “This performance data helps us to target those dollars more strategically.”

Track progress on strategic planning efforts

Many governments use strategic planning to identify priorities, establish common goals, and mobilize resources to help achieve those goals, but do not have a clear mechanism for tracking progress. As a result, it’s difficult to ensure that key goals and objectives are achieved or key stakeholders are held accountable for the goals they set. Outcome monitoring systems are one option to regularly and transparently measure and track progress of statewide strategic plans.

In Connecticut, the Department of Public Health created its Healthy Connecticut 2020 performance system to track progress in implementing key goals outlined in its state health improvement plan (SHIP). Developed in concert with more than 100 coalition partners, the SHIP identifies focus areas to improve health promotion and disease prevention. Action teams meet every other month and use data from the dashboard to measure the state’s performance against the goals. Performance data also help action teams identify areas for improvement and discuss new strategies. “When action teams create their agendas, they review the objectives in the SHIP and continually check in to see where we are as a state and to make sure we are headed in the right direction,” said Sandra Gill, a project consultant for Healthy Connecticut 2020.5

Actions states can take to improve their performance management systems

While use of performance management in state government has advanced considerably over the past two decades, many states still struggle to implement systems that collect and report accurate data, measure meaningful results, and analyze and present information in ways that make it actionable. States vary widely in the type of data they collect and report. While some states have made significant strides in measuring the impact of their work, others continue to report only on outputs. For example, a scan of Vermont’s performance reporting system found that strategic outcomes and performance measures were available for approximately 10.5 percent of all programs.6 Similarly, some states have made performance management a top priority and regularly use outcome data to gauge progress of government programs, while in other states reporting performance data can be a compliance exercise and data are rarely used to make decisions.

The following section highlights actions that states can take to maximize the effectiveness of their outcome monitoring systems. States can often build on the systems and processes already in place for measuring performance. An assessment of the existing system—including a review of promising practices and aspects needing attention—is a good place to start and, over time, states can address areas for improvement.7 While these efforts often require additional investment of staff time and in some cases additional resources, states can reap significant benefits through more efficient and effective services, and enhanced public accountability.

Action 1: Identify appropriate objectives, measures, and benchmarks

Developing meaningful and accurate outcome measures is crucial to creating an effective performance management system. In some cases, metrics may have been developed years earlier and may no longer reflect the current state of the field. In other cases, states may have initially developed process measures because they lacked the data necessary to track the results those programs aimed to achieve. For example, only 24 states have policies in place to regularly review or audit performance indicators.8 Over the last two decades, research has been conducted on what outcomes are important to track and how programs or policies can be expected to affect those outcomes. States can draw on this research to inform their own systems.9

Use research to identify appropriate long-term outcomes

An effective outcome monitoring system also measures long-term outcomes relevant to a state’s objectives, such as growing the economy, developing a well-educated public, having healthier residents, and creating safer communities. States can use existing research as a starting point to develop these metrics, and then tailor them to their specific key priorities (see Appendix A for guidance on developing and measuring outcomes).

Healthy People 2020, a federal initiative coordinated by agencies within the U.S. Department of Health and Human Services, provides a comprehensive set of science-based, 10-year national goals and objectives for improving the health of all Americans. Thirty-eight states and the District of Columbia have adopted these goals based on their local needs and developed advanced systems to track those key indicators over time.10 One of those states, Connecticut, used this framework to develop long-term outcomes for its health improvement plan (such as reducing the rate of unplanned pregnancies as well as the rate of adults with high blood pressure) to track how residents are faring across their lives in areas such as heart disease, obesity, obtaining vaccinations, and exposure to environmental risks. These outcomes were integrated into the state health improvement plan process and tracked through a performance dashboard to give partners and the public transparency and accountability on progress in meeting health improvement targets.

Identify short- and intermediate-term measures that are predictive of long-term success

For some outcome measures, success can only be determined after an extended period of time, making it difficult to know whether a program is working as intended. To ensure that programs are on track, agencies should also identify short- and intermediate-term indicators, which could include a mix of process and outcome measures that are determined, appraised, and reported more frequently. For evidence-based programs, agencies can track key measures to ensure the programs are effectively delivered according to their design.

In Colorado, the Department of Human Services recently started discussing the state’s family support and coaching programs, also known as home visiting programs, through its performance management system (C-Stat), but found that program outcomes were challenging to measure because they were far into the future. Thus, the department identified and began tracking several process measures related to program implementation that research shows are critical precursors to achieving program goals, such as timeliness of services and compliance with statutory requirements. “We wanted to see if individual providers were conducting monthly visits as prescribed, and we found out that no, even this evidence-based program wasn’t operating uniformly,” said Wavelet, whose office oversees C-Stat.11 Program staff and leadership worked closely with the intermediary responsible for directly managing the providers and contracts, connecting them to national technical assistance opportunities. The state is now interested in potentially tracking implementation of evidence-based models in other areas, such as juvenile justice, to provide better assurance that programs are on track to achieve positive outcomes. “We’ve just begun scratching the surface of what’s evidence-based and what’s not,” said Wavelet.

Assess whether existing programs and strategies align with long-term outcomes

Once high-level goals and metrics have been established, states can examine the extent to which the programs and services they operate are achieving those goals. For evidence-based programs, states can use existing research to identify which measures to track. For programs that have not yet been rigorously evaluated, governments can use logic models,12 tools that can be used to establish links between programs and the outcomes they aim to achieve. Through this review, agencies may discover that some of the programs they operate are not designed to achieve critical outcomes and can begin to make improvements.

Illinois developed a comprehensive outcome monitoring methodology, called Budgeting for Results (BFR), to evaluate roughly 400 state programs based on their alignment with expected outcomes, return on investment, and predicted effectiveness, according to research and performance data. In July 2017, the Governor’s Office of Management and Budget piloted this new approach in adult criminal justice. BFR began by comparing three current programs intended to reduce recidivism by individuals leaving state prison to a national research database to determine which programs were evidence-based and had been shown effective in addressing this key outcome. The analysis revealed that the three programs—correctional post-secondary education, correctional basic skills education, and vocational education in prison—were effective at reducing recidivism. Based on these findings, BFR offered recommendations to further improve the programs by setting long-term goals, including targets and timelines, and expanding the use of these tools to other policy domains identified as state priorities by the governor, such as health and education.13

Benchmark performance to help drive improvement

Measuring results against benchmarks (standards or reference points for comparing performance) and using historical data to show long-term trends can provide important context for policymakers to understand performance and whether it is improving, getting worse, or staying the same. Benchmarks may include industry standards or best practices (often set by the federal government or research-based advocacy organizations), or program performance in similar states. In addition, states may choose to set their own targets, which can motivate program staff and managers to improve performance by establishing clear expectations and goals. Targets must be appropriately contextualized to ensure comparisons are meaningful, given that numerous factors could be influencing results. For example, a job placement program with a significant portion of formerly incarcerated clients should not be directly compared to a program that serves a different population without accounting for this factor, because an unadjusted comparison could skew the results.

In Florida, the Department of Children and Families (DCF) publishes an annual review of the state’s efforts to prevent child abuse, neglect, and abandonment by reporting on key performance indicators.14 The report compares Florida’s performance across 18 measures to all 50 states, using high-quality, verified data made available through organizations such as the Annie E. Casey Foundation’s KIDS COUNT network and the federal Administration for Children and Families, as well as the agency’s own data. The data provide the state with a better understanding of how Florida compares to its peers, as well as the national average, to guide strategies for performance improvement toward identified outcomes.
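As a rough illustration of the benchmarking idea described above, the following Python sketch compares a measure's latest value with a target and labels its direction using historical data. The measure name, the figures, and the half-point tolerance are hypothetical assumptions made for illustration; they are not drawn from any state's system.

```python
def trend(history, higher_is_better=True, tolerance=0.5):
    """Label the change from the earliest to the latest value in `history`."""
    change = history[-1] - history[0]
    if abs(change) <= tolerance:  # assumed cutoff for "no real change"
        return "steady"
    improved = change > 0 if higher_is_better else change < 0
    return "improving" if improved else "worsening"


def meets_target(latest, target, higher_is_better=True):
    """Check the latest value against a target set by the state or a benchmark."""
    return latest >= target if higher_is_better else latest <= target


def summarize(name, history, target, higher_is_better=True):
    """Combine the target check and the trend label for one measure."""
    latest = history[-1]
    return {
        "measure": name,
        "latest": latest,
        "target": target,
        "meets_target": meets_target(latest, target, higher_is_better),
        "trend": trend(history, higher_is_better),
    }


# Hypothetical job placement rates (percent) for three consecutive years.
placement = summarize("job placement rate", [52.0, 55.0, 58.0], target=60.0)
print(placement)
```

In this made-up case the measure is below its target but moving in the right direction, which is the kind of context a single point-in-time figure would not show. A sketch like this does not adjust for differences in the populations served, so it would not by itself make two programs comparable.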

Action 2: Analyze and report targeted performance information

When feasible, states should disaggregate performance data—including by geographic region, provider, or population characteristics—to enable them to more quickly identify problems and develop targeted strategies to address them. Statewide performance data of any program can mask significant differences across populations and geographic areas. Understanding these differences is critical to determining whether the program is working for certain demographic or geographic populations but not others, and for targeting funding to support the program where it is most needed. For instance, the statewide rate of youth engaged in underage drinking, a common performance metric for state health departments, may lead policymakers to conclude that existing programs to address this issue are working well across the entire state, when in fact the problem may be more widespread in certain communities or schools than others.

States can disaggregate data to:

- Compare performance across jurisdictions: While statewide outcome metrics are important to comparing performance across agencies and programs, county-, regional-, or city-level data can inform policies to improve practices and ensure all areas in the state receive high-quality, effective services. In New York state, health department staff members regularly share jurisdiction-level data from their Prevention Agenda, the state’s health improvement plan, with local health departments, regional planning committees, and local hospitals to help inform program improvements. “The [Prevention Agenda] dashboard provides county and sub-county level data so they can focus on specific areas to provide more aggressive interventions. At the local level, for those that don’t have the capacity to run the data or assess their needs, this dashboard provides them very valuable information,” said Trang Nguyen, deputy director of the Office of Public Health Practice, which oversees the Prevention Agenda work.15

- Compare performance across providers: When states contract with community-based nonprofit or private organizations to deliver services, having data that allow for comparison of their performance can be an important tool for driving program improvement. The Florida Department of Children and Families (DCF) publishes a monthly16 review of the state’s efforts to prevent child abuse, neglect, and abandonment by reporting on key performance indicators. The monthly report provides disaggregated data for 27 performance indicators by child welfare community-based care (CBC) agencies across the state. Leadership relies on this report when identifying priority of effort initiatives. It also guides strategic conversations and planning for meaningful and sustainable performance improvement toward identified outcomes. In addition, DCF maintains a CBC Scorecard17 that tracks the performance of the 18 CBC agencies contracted to provide all prevention, foster care, adoption, and independent living services to children and families in the child welfare system across the state. The measures the state tracks are based on indicators developed by the Children’s Bureau in the U.S. Administration for Children and Families, centered around three goals: safety, permanency, and well-being. Those measures, along with key child welfare trends, are tracked in their Child Welfare Dashboard18 and can be filtered by region, circuit, county, or CBC lead agency.

- Compare performance across population groups: Population-level data broken out by demographic characteristics such as gender, race/ethnicity, and socioeconomic status can provide decision-makers with important information when determining how to allocate resources across the state. Minnesota’s statewide dashboard tracks 40 key indicators across eight priority areas ranging from strong and stable families and communities to efficient and accountable government services. Within each indicator, the Minnesota Management and Budget (MMB) office reports the data as a statewide aggregate, as well as disaggregated by groups, such as race/ethnicity, gender, income, geographic location, and disability status. Myron Frans, commissioner of MMB, explained, “It’s an expectation [among policymakers] that we disaggregate our data, especially with regards to racial, socioeconomic, and geographic factors. Things can look great in the aggregate, but not so great when you drill down. We break out the data so that we can improve the services we provide.”19 The state also provides guidance on incorporating this performance management framework into budget documents to increase the accessibility and utility of the information to policymakers.20
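To make the point about aggregates concrete, here is a minimal Python sketch of how a statewide rate can look acceptable while one jurisdiction lags well behind. The county names, survey counts, and the five-point margin are hypothetical assumptions chosen for illustration, not data from any state discussed in this brief.

```python
from collections import defaultdict

# Hypothetical survey counts: (county, youth surveyed, youth reporting underage drinking).
records = [
    ("County A", 4000, 400),
    ("County B", 3000, 300),
    ("County C", 1000, 300),
]


def rate(events, population):
    """Return a percentage rate."""
    return 100.0 * events / population


def statewide_rate(rows):
    """Aggregate rate across every county, weighted by the number surveyed."""
    total_population = sum(pop for _, pop, _ in rows)
    total_events = sum(events for _, _, events in rows)
    return rate(total_events, total_population)


def rates_by_county(rows):
    """Disaggregated rate for each county."""
    totals = defaultdict(lambda: [0, 0])
    for county, pop, events in rows:
        totals[county][0] += pop
        totals[county][1] += events
    return {county: rate(events, pop) for county, (pop, events) in totals.items()}


def counties_above_statewide(rows, margin=5.0):
    """Counties whose rate exceeds the statewide rate by at least `margin` points."""
    overall = statewide_rate(rows)
    return sorted(c for c, r in rates_by_county(rows).items() if r - overall >= margin)


print(statewide_rate(records))
print(rates_by_county(records))
print(counties_above_statewide(records))
```

With these made-up figures, the statewide rate is 12.5 percent, yet the smallest county's rate is 30 percent. The same grouping approach could be applied to providers or population groups by swapping the grouping key.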

Action 3: Create opportunities to make better use of performance data

While collecting and reporting performance data are important, getting policymakers to use the information is not a foregone conclusion; it requires careful planning and consideration. To make the most of these data, policymakers and key stakeholders must have regular opportunities to meet and discuss what the data are showing, including what strategies are being employed and ideas for improvements. Relatively few states have forums in place to regularly discuss performance data throughout the year—for example, 29 states continue to report outcomes as part of their budget process, either annually or semiannually, which can limit the ability of agencies to use the information to improve the services and programs they provide.21

Regularly scheduled, ongoing meetings between leadership and agencies focused on using performance data to drive discussions on how to continually improve outcomes can be useful. Participants discuss progress made on key goals, including areas where performance is lagging, and strategies to address them. Several states use processes to engage entities across government on performance improvement, such as PerformanceStat,22 to reinforce accountability and to solve problems collaboratively.

In Washington, the team in charge of the state’s performance management system, Results Washington, coordinates monthly meetings to bring together agency directors and representatives from the governor’s office to discuss progress in meeting statewide objectives, as well as strategies to address areas needing improvement. “I can’t think of a major problem that can be addressed by a single agency or that doesn’t include another partner outside of state government. Our plan is to bring those people into these problem-solving conversations. …Performance data can help to frame those discussions,” said Inger Brinck, director of Results Washington.23 Until recently, meetings have focused on one of five goal areas and utilized data from performance dashboards that track the progress of 14 strategic priorities. Washington is now broadening these meetings to focus on how multiple agencies and partners outside of government can come together to address a particular problem, such as homelessness.

While ongoing meetings provide one avenue for using performance data to problem solve and develop strategies for improvement, states can also hold regular convenings (annually, semiannually, or quarterly) to review historical and current performance and plan for the future. This option may be more feasible either in states that don’t have the capacity to coordinate weekly or biweekly meetings, which often require significant preparation and data analysis, or in cases where the outcome measures being tracked change relatively slowly.

In Wisconsin, all cabinet agencies report quarterly performance data to the governor’s office, and then leaders from agencies, the governor’s office, and the Department of Administration hold semiannual meetings to discuss each agency’s progress on goals and performance measures. In preparation for the meetings, department staff help prepare questions for agencies based on anomalies in the performance data to identify problems and use that information to improve services.24

Action 4: Coordinate and combine outcome monitoring with other evidence-based policymaking efforts

Measuring program performance through outcome monitoring is an important component for any state implementing an evidence-based policymaking approach. However, states should be careful not to rely solely on performance data to make critical programmatic, policy, or funding decisions. “Performance information will not replace all the decision-making that goes into the budget process, nor should stakeholders expect it to,” said Charles Sallee, deputy director for program evaluation at New Mexico’s Legislative Finance Committee (LFC). “For example, if you need to cut budgets and your child protective services program shows struggling performance, you might not want to cut it. Programs that always meet the mark might not need more money, especially if they have less challenging performance targets. So, nothing is automatic.”

While still in the early stages, some states are beginning to see the benefits of aligning various efforts aimed at improving government performance to give leaders a more complete picture of how government programs are performing, where programs are effective and where they are not, and where resources are most needed. These efforts include information from performance reporting systems, research clearinghouses that systematically assess the evidence of programs, implementation and contract oversight offices, process improvement initiatives, and legislative research offices that frequently conduct outcome evaluations of state programs.

In New Mexico, the LFC encourages its staff to leverage multiple sources of evidence—including performance data, local program evaluations, and national research clearinghouses, along with cost-benefit analyses—when making budget recommendations. In the early 2000s, the LFC created a Legislating for Results framework, which outlines a systematic approach to using research and performance data to inform the budget process.25 Key steps include:

- Use performance data to identify underperforming programs. Throughout the year, analysts use statewide performance indicators to identify policy areas and outcomes that are underperforming and require additional review. These surveillance efforts, which are detailed in quarterly report cards issued to each major agency, help the LFC select priority areas for subsequent analysis and evaluation.

- Review program effectiveness information. After selecting priority areas, LFC analysts then review existing research on the effectiveness of the programs currently operated in that policy area. To do this, analysts use research clearinghouses, which review and aggregate impact studies to rate programs by their level of evidence of effectiveness. These in-depth analyses help the LFC determine the strengths and weaknesses of current resource allocations and form the basis for subsequent budget recommendations.

- Develop budget recommendations. Per annual budget guidelines,26 analysts use performance data, program evaluation results, and cost-benefit analysis to identify which programs are most likely to yield both desired outcomes and a return on taxpayer investment. The analysts then use this information to help craft budget recommendations. Programs identified as cost-effective have seen their funding maintained or increased, while funds are shifted away from programs shown not to work or not to generate returns on taxpayer dollars.

- Monitor program implementation. Analysts use performance reports and other tools to track the core components of each program and ensure implementation is consistent with the program’s original design.

- Evaluate outcomes. Analysts compare program outcomes against set targets—benchmarked against achievements by other states, industry standards, national data, and findings from existing program evaluations—to determine whether programs are achieving desired results.

Through this process, the state has been able to systematically analyze and fund innovations within a number of high-priority policy areas. For example, after observing consistently low literacy rates among New Mexico’s children, identified through the state’s performance monitoring, LFC analysts reviewed data to determine the potential causes and propose programmatic solutions supported by strong research. The state identified prekindergarten as an effective investment and conducted a program evaluation that demonstrated positive, substantial long-term results. The LFC then recommended an additional $28 million for early childhood programs, including $6.5 million for pre-K,27 and found that the percentage of program participants reading at grade level in kindergarten and third grade had improved.28

In Vermont, the governor recently empowered the chief performance officer to coordinate several of the state’s disparate government improvement efforts, including the development of a performance dashboard to measure progress on statewide priorities and the use of Lean, a process framework to improve government services, and Results-Based Accountability (RBA), an approach to identify and track outcomes of government programs based on their impact on individuals and communities. As part of Executive Order 04-17, this position directs all state agencies to adopt continuous improvement strategies, develop a “living” strategic plan, regularly monitor outcomes identified in the plan, and provide opportunities to build this capacity throughout government.

A key part of the new system, called Program to Improve Vermont Outcomes Together (PIVOT), involves training state agency staff on various aspects of continuous quality improvement, including RBA and Lean. “We might use performance data in a discussion about why we are getting particular results, which could lead to a discussion about which processes need to be streamlined, where we can use Lean,” said Susan Zeller, the chief performance officer for the state.29 “You will not always Lean your way to better results. But you also won’t always RBA yourself to more efficient processes. So, we put them together.”

In Minnesota, MMB used performance data, along with evidence and research, to guide the development of its 2018 biennial budget. Staff from MMB convened regular interagency conversations to examine how their budget proposals aligned with priority outcomes identified by the governor, using national research clearinghouses and other sources of high-quality research, as well as performance data from the Minnesota Dashboard, which tracks 40 key indicators to measure the state’s well-being. The governor’s fiscal year 2018 budget included several new initiatives supported by strong evidence, including an initiative to address homelessness among students called Homework Starts with Home. “We have an obligation to make sure that taxpayer resources are being used in the most effective way possible. We use research on the evidence of program effectiveness to help us achieve that goal,” said Frans, commissioner of MMB.30

Creating and sustaining a data-driven culture

As highlighted throughout this brief, a systematic approach to performance management is crucial to the success of statewide goals, priorities, and programs over time. Since leadership change is inevitable in state government, performance management systems must also be amenable to changing missions and strategic priorities.31 There are some key ways to maintain and strengthen outcome monitoring systems amid change:

- Engage leadership and maintain support. In addition to requiring regular reporting and analysis of performance information through law, leaders need to be engaged with the work to promote change. Support from both executive and legislative leaders, through either an executive order or a law, can help validate these efforts across the state. Many states, such as Illinois, Wisconsin, New Mexico, and Vermont, have implemented executive orders or laws requiring performance reporting, and have found such actions beneficial to support improvement with a “top-down vision, bottom-up ideas,” as characterized by Susan Zeller, the chief performance officer of Vermont.32

- Train staff to use performance data. States can train agency staff to use performance data in their day-to-day operations to further cement a culture of continuous improvement. Some states host training academies, such as Colorado’s six-day Performance Management Academy.33 In other states, the executive office makes training a priority by committing to train a certain number of staff each year. The Vermont Agency of Administration, for example, has committed to developing a training plan for all new employees (within six months of hire) while continuing to train up to 50 percent of all staff across state agencies by 2023, with champions from each agency comprising a performance steering committee.

- Support agency collaboration in achieving shared goals. Several states identify a set of key priorities or goals for a policy area, many of which involve the contributions of multiple agencies. Holding agencies accountable to achieve these collective goals through ongoing performance reporting can spur greater collaboration and promote coordination of services and funding to help solve complex challenges.

- Combine performance management with other government improvement efforts. Many governments are thinking about how traditional performance data can be combined with evaluation findings, cost-benefit analyses, the Lean process improvement framework, and other similar efforts, to get a more complete picture of performance within the state. Kelli Kaalele, the director of the Office of Policy within the Wisconsin Department of Administration, underscored the importance of driving holistic action: “The governor introduced a lot of executive orders that tie into transparency, lean government, and this dashboard. Those have really helped move the state forward into getting into evidence-based policymaking; it has started to change the culture of our state government in a positive way.”34

Conclusion

As jurisdictions strive to increase efficiency, transparency, and accountability within government operations, performance management systems provide an accessible platform to monitor outcomes and inform policymaking statewide. Comprehensive performance management systems track useful metrics and objectives against benchmarks, analyze and report information for key stakeholders, and establish routine ways to use data in decision-making.

While capacity constraints, both human and financial, can make it difficult for some jurisdictions to implement a more robust outcome monitoring framework, governments can focus on more strategically utilizing the information and resources they have to better engage in evidence-based policymaking.

Acknowledgments

We would like to thank Peter Bernardy of Minnesota Management and Budget, Charles Sallee of the New Mexico Legislative Finance Committee, and Kathryn Vesey White of the National Association of State Budget Officers for reviewing and providing insightful feedback on the final report. We’d also like to thank Katherine Barrett and Rich Greene of Barrett and Greene Inc. for their thoughtful review.

Appendix A: Helpful links

Resources for developing and measuring outcomes

U.S. Department of Health and Human Services:

- HRSA Maternal and Child Health, “National Outcome Measures,” https://mchb.tvisdata.hrsa.gov/PrioritiesAndMeasures/NationalOutcomeMeasures.

- The Office of Disease Prevention and Health Promotion, “Healthy People 2020,” https://www.healthypeople.gov.

- Centers for Disease Control and Prevention, “Community Health Assessment for Population Health Improvement: Resource of Most Frequently Recommended Health Outcomes and Determinants,” https://stacks.cdc.gov/view/cdc/20707.

Sonoma County, California—Upstream Investments, “Report on Best Practices in Other Communities,” http://www.upstreaminvestments.org/documents/RBPOC.pdf.

The Urban Institute, “The Nonprofit Taxonomy of Outcomes: Creating a Common Language for the Sector,” https://www.urban.org/sites/default/files/taxonomy_of_outcomes.pdf.

Annie E. Casey Foundation, “Outcomes: Reframing Responsibility for Well-Being,” http://www.aecf.org/resources/outcomes-reframing-responsibility-for-well-being.

What Works Cities:

- “Performance Management Getting Started Guide,” https://www.gitbook.com/book/centerforgov/performance-management-getting-started/details.

- “Setting Performance Targets,” https://www.gitbook.com/book/centerforgov/setting-performance-targets-getting-started-guide/details.

National Association of State Budget Officers, “Budget Processes in the States,” https://www.nasbo.org/reports-data/budget-processes-in-the-states.

Performance measurement systems highlighted in this report

- Colorado, C-Stat, https://www.colorado.gov/pacific/cdhs/c-stat.

- Connecticut, Healthy Connecticut 2020, http://www.portal.ct.gov/DPH/State-Health-Planning/Healthy-Connecticut/Healthy-Connecticut-2020.

- Florida, Child Welfare Dashboards, http://www.dcf.state.fl.us/programs/childwelfare/dashboard.

- Idaho, Get Healthy Idaho, http://healthandwelfare.idaho.gov/Portals/0/Health/Get%20Healthy%20Idaho.vers2018.pdf.

- Illinois, Budgeting for Results, https://www2.illinois.gov/sites/budget/Pages/results.aspx.

- Minnesota, Minnesota Dashboard, https://mn.gov/mmb/mn-dashboard.

- New Mexico, Legislative Finance Committee, https://www.nmlegis.gov/Entity/LFC/Default.

- New York, Prevention Agenda Dashboard, https://health.ny.gov/preventionagendadashboard.

- Vermont, PIVOT, http://governor.vermont.gov/content/program-improve-vermont-outcomes-together-executive-order-04-17.

- Washington, Results Washington, https://www.results.wa.gov.

- Wisconsin, Agency Performance Dashboards, https://performance.wi.gov.

Endnotes

1. For more definitions of performance measurement, see “GPRA: Performance Management and Performance Measurement,” U.S. Office of Personnel Management, accessed June 11, 2018, https://www.opm.gov/wiki/training/Performance-Management/Print.aspx; Harry P. Hatry, Performance Measurement: Getting Results, 2nd ed. (Washington, D.C.: Urban Institute Press, 2007); The Pew Charitable Trusts, “How States Use Data to Inform Decisions: A National Review of the Use of Administrative Data to Improve State Decision-Making” (2018), http://www.pewtrusts.org/en/research-and-analysis/reports/2018/02/how-states-use-data-to-inform-decisions.

2. Charles Sallee (deputy director for program evaluation, New Mexico Legislative Finance Committee), interview with Pew-MacArthur Results First Initiative, Aug. 7, 2017.

3. Melissa Wavelet (director, Office of Performance and Strategic Outcomes, Colorado Department of Human Services), interview with Pew-MacArthur Results First Initiative, Aug. 18, 2017.

4. Elke Shaw-Tulloch (administrator, Division of Public Health, Idaho Department of Health and Welfare), interview with Pew-MacArthur Results First Initiative, Sept. 11, 2017.

5. Sandra Gill (project consultant, Healthy Connecticut 2020), interview with Pew-MacArthur Results First Initiative, Aug. 22, 2017.

6. State of Vermont Agency of Administration, “Memorandum, RE: Submission of Targeted Action Plans and PIVOT Update” (June 20, 2017), http://spotlight.vermont.gov/sites/spotlight/files/Performance/PIVOT/TAP_Gov_Submission_PIVOT_Update_June2017_FINAL.pdf.

7. For more information on ways states use performance measures, see Harold I. Steinberg, “State and Local Governments’ Use of Performance Measures to Improve Service Delivery” (2009), https://www.agacgfm.org/Research-Publications/Online-Library/Research-Reports/State-and-Local-Governments-Use-of-Performance-Me.aspx.

8. National Association of State Budget Officers, “Budget Processes in the States” (2015), https://www.nasbo.org/reports-data/budget-processes-in-the-states.

9. United Way of America, “Measuring Program Outcomes: A Practical Approach” (1996), 132–36, https://digitalcommons.unomaha.edu/cgi/viewcontent.cgi?referer=https://www.google.com/&httpsredir=1&article=1047&context=slceeval; John W. Moran, Paul D. Epstein, and Leslie M. Beitsch, “Designing, Deploying and Using an Organizational Performance Management System in Public Health: Cultural Transformation Using the PDCA Approach” (2013), Public Health Foundation, http://www.phf.org/resourcestools/Documents/Performance%20Mgt%20System%20Paper%20Version%20FINAL.pdf; Judy Clegg and Dawn Smart, “Measuring Outcomes” (2010), National Resource Center, Compassion Capital Fund, http://strengtheningnonprofits.org/resources/guidebooks/measuringoutcomes.pdf; Claire Robertson-Kraft, “Performance Indicators: Tips & Lessons Learned,” accessed June 12, 2018, https://www.fels.upenn.edu/recap/posts/1521.

10. Office of Disease Prevention and Health Promotion, “State and Territorial Healthy People Plans,” accessed June 12, 2018, https://www.healthypeople.gov/2020/healthy-people-in-action/State-and-Territorial-Healthy-People-Plans.

11. Wavelet interview.

12. For more information on how to create a logic model, see Substance Abuse and Mental Health Services Administration, “Understanding Logic Models,” last modified Sept. 8, 2016, https://www.samhsa.gov/capt/applying-strategic-prevention-framework/step3-plan/understanding-logic-models.

13. Budgeting for Results Commission, “Budgeting for Results: 7th Annual Commission Report” (2017), https://www2.illinois.gov/sites/budget/Documents/Budgeting%20for%20Results/2017%20BFR%20Annual%20Commission%20Report.pdf.

14. Florida Office of Child Welfare, Performance and Quality Management, “Child Welfare Key Indicators Annual Review: FY 2015-16” (2016), http://centerforchildwelfare.org/qa/cwkeyindicator/CWKeyIndAnnualReviewFY-2015-16.pdf.

15. Trang Nguyen (deputy director, Office of Public Health Practice, New York State Department of Health), interview with Pew-MacArthur Results First Initiative, Oct. 16, 2017.

16. Florida’s Center for Child Welfare, “Child Welfare Key Indicators Monthly Report,” accessed June 12, 2018, http://www.centerforchildwelfare.org/ChildWelfareKeyIndicators.shtml.

17. Florida Department of Children and Families, “Community Based Care (CBC) Scorecard,” accessed June 12, 2018, https://www.myflfamilies.com/service-programs/child-welfare/dashboard/.

18. Florida Department of Children and Families, “Child Welfare Dashboards,” accessed June 12, 2018, https://www.myflfamilies.com/service-programs/child-welfare/dashboard/.

19. Myron Frans (commissioner, Minnesota Management and Budget), interview with Pew-MacArthur Results First Initiative, Feb. 9, 2018.

20. Minnesota Management and Budget, “Statewide Outcomes and Results Based Accountability Instructions,” accessed June 12, 2018, https://mn.gov/mmb/assets/RBA-Instructions-Accessible_tcm1059-244343.pdf.

21. National Association of State Budget Officers, “Budget Processes in the States.”

22. Evidence-Based Policymaking Collaborative, “What You Need to Know About PerformanceStat,” accessed July 9, 2018, https://www.evidencecollaborative.org/toolkits/performancestat.

23. Inger Brinck (director, Results Washington), interview with Pew-MacArthur Results First Initiative, Feb. 13, 2018.

24. Kelli Kaalele (director, Office of Policy, Wisconsin Department of Administration), interview with Pew-MacArthur Results First Initiative, Oct. 20, 2017.

25. New Mexico Legislative Finance Committee, “Legislating for Results,” accessed June 12, 2018, https://www.nmlegis.gov/Entity/LFC/Documents/Accountability_In_Goverment_Act/Legislating%20For%20Results.pdf.

26. New Mexico Department of Finance and Administration, “Guidelines to Performance-Based Budgeting,” accessed June 12, 2018, http://www.nmdfa.state.nm.us/uploads/FileLinks/8de07941945d4798bf5ce7fe3c7a00f3/Performance%20Based%20Budgeting%20Guidelines.pdf.

27. New Mexico Legislative Finance Committee, “Evidence-Based Early Education Programs to Improve Education Outcomes” (2014), https://www.nmlegis.gov/Entity/LFC/Documents/Results_First/Evidence-Based Early Education Programs to Improve Education Outcomes.pdf.

28. New Mexico Legislative Finance Committee, “2017 Accountability Report: Early Childhood,” accessed June 12, 2018, https://www.nmlegis.gov/Entity/LFC/Documents/Program_Evaluation_Reports/Final%202017%20Accountability%20Report%20Early%20Childhood.pdf.

29. Susan Zeller (chief performance officer, Vermont Agency of Administration), interview with Pew-MacArthur Results First Initiative, Feb. 12, 2018.

30. Frans interview.

31. National Association of State Budget Officers, “Investing in Results: Using Performance Data to Inform State Budgeting” (2014), https://higherlogicdownload.s3.amazonaws.com/NASBO/9d2d2db1-c943-4f1b-b750-0fca152d64c2/UploadedImages/Reports/NASBO%20Investing%20in%20Results.pdf.

32. Zeller interview.

33. Colorado Performance Management and Operations, “Academy,” accessed June 12, 2018, https://www.colorado.gov/pacific/performancemanagement/academy.

34. Kaalele interview.

The Results First initiative, a project supported by The Pew Charitable Trusts and the John D. and Catherine T. MacArthur Foundation, authored this publication.

Key Information

Source: Results First™

Publication Date: August 16, 2018

Read Time: 23 min

Component: Outcome Monitoring

Resource Type: Written Briefs
